Qianyi Chen (Daisy)

MDes Fall 2022

Interactions for Dynamic Blending Virtual and Real Environments in Head-Mounted Displays

Introduction

Existing work has studied how the physical world can be integrated into the virtual world in MR systems, covering aspects of the blending that range from usability across different tasks (McGill et al.) to approaches for rendering objects and environments (Budhiraja et al.). However, only a few works shed light on how the blending can be customized.

To address this gap, our work investigates two research questions through a series of design explorations:

RQ1: How might we design the interactions for users to customize the passthrough of the real world in a virtual scene?

RQ2: How might we support the customization process so that it balances freedom of customization against cognitive load?

Within the scope of this thesis project, we focus on blending for individual tasks and implemented several high-fidelity demos in Unity as proofs of concept. Future work can extend ours by exploring the technical aspects in more depth.

Process

This project comes at an opportune time: the technical difficulty of building an MR system has started to drop for designers, while the problem space still has much room to explore. Advances and upgrades in hardware lower the barrier for designers to prototype experiences that blend the physical and virtual realms.

In the early stage of technical investigation, we explored low-fi and mid-fi prototyping tools, including ShapesXR, VRCeption, and SparkAR.

Overall, the low-fi technical explorations allowed us to form an initial understanding of how a blended environment might look visually. To test interactivity, we moved to the next stage: mid-fi prototyping in SparkAR. From the interactions we had brainstormed, we picked the “torch” metaphor for the demo, in which the passthrough is driven by the user’s head position. In the SparkAR demo, we demonstrated an interactive scene with a reality torch that casts a shadow on digital surfaces and reveals reality.
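The torch idea can be illustrated with a small geometric sketch. It assumes the reality torch is a cone centered on the head's forward direction: points on a virtual surface inside an inner cone show full passthrough, points outside an outer cone stay fully virtual, and opacity falls off linearly in between. The `Vec3` type, the function `torch_passthrough_alpha`, and the `inner_deg`/`outer_deg` angles are hypothetical choices for illustration, not the SparkAR demo's actual implementation.

```python
import math
from dataclasses import dataclass


@dataclass
class Vec3:
    x: float
    y: float
    z: float

    def sub(self, other: "Vec3") -> "Vec3":
        return Vec3(self.x - other.x, self.y - other.y, self.z - other.z)

    def dot(self, other: "Vec3") -> float:
        return self.x * other.x + self.y * other.y + self.z * other.z

    def norm(self) -> float:
        return math.sqrt(self.dot(self))

    def normalized(self) -> "Vec3":
        n = self.norm()
        return Vec3(self.x / n, self.y / n, self.z / n)


def torch_passthrough_alpha(head_pos: Vec3, head_forward: Vec3, surface_point: Vec3,
                            inner_deg: float = 10.0, outer_deg: float = 20.0) -> float:
    """Passthrough opacity in [0, 1] for a point on a virtual surface.

    Hypothetical model: 1.0 inside the inner cone (reality fully revealed),
    0.0 outside the outer cone (virtual surface fully opaque), and a linear
    ramp between the two cone angles.
    """
    to_point = surface_point.sub(head_pos)
    if to_point.norm() == 0.0:
        return 1.0  # point coincides with the head; treat as revealed
    cos_angle = head_forward.normalized().dot(to_point.normalized())
    # Clamp to guard acos against tiny floating-point overshoot.
    angle = math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))
    if angle <= inner_deg:
        return 1.0
    if angle >= outer_deg:
        return 0.0
    return (outer_deg - angle) / (outer_deg - inner_deg)
```

With the head at the origin looking along +z, a point straight ahead returns 1.0, a point 90° off-axis returns 0.0, and a point 15° off-axis returns roughly 0.5. Swapping the linear ramp for a smoothstep would soften the torch edge further.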

Conclusion

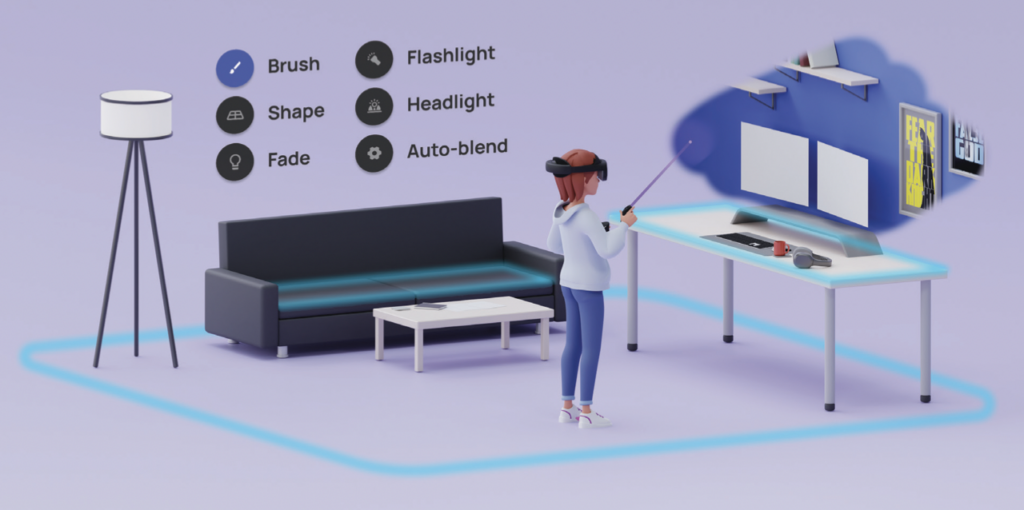

Our final design is demonstrated through a high-fidelity MR application prototype running on Meta Quest 2 and Quest Pro. The core of the application is an interactive MR workspace loosely resembling the room where the users are physically situated. In addition to completely virtual content, users can map out real-world objects upfront, such as desks, couches, walls, doors, and windows.
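The objects users map out upfront need some spatial representation before the app can blend around them. A minimal sketch, assuming each mapped object is stored as a labeled axis-aligned bounding box in room coordinates (a hypothetical simplification; the prototype's actual data model is not described here), shows how the app could look up which real objects occupy a given point, for example when deciding where passthrough might be revealed. `MappedObject` and `objects_at` are illustrative names.

```python
from dataclasses import dataclass
from typing import List, Tuple

Point = Tuple[float, float, float]


@dataclass
class MappedObject:
    """A real-world object the user mapped upfront (hypothetical schema)."""
    label: str         # e.g. "desk", "couch", "wall"
    min_corner: Point  # axis-aligned bounding box in room coordinates (meters)
    max_corner: Point

    def contains(self, p: Point) -> bool:
        # Inside iff every coordinate lies within the box's extent on that axis.
        return all(lo <= v <= hi for lo, v, hi in zip(self.min_corner, p, self.max_corner))


def objects_at(room: List[MappedObject], p: Point) -> List[str]:
    """Labels of all mapped real objects whose bounds contain point p."""
    return [obj.label for obj in room if obj.contains(p)]
```

Boxes keep the lookup trivial; walls, doors, and windows could be stored as thin boxes under the same scheme, while irregular furniture would need a richer shape.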

The prototype was implemented in Unity using the Oculus XR Plugin and SDK with the OpenXR backend. We chose Meta Quest 2 and Meta Quest Pro as our testing devices because of their availability and community support, but the experience can be ported to any 6DoF MR headset that supports passthrough, spatial anchors, and controller tracking.
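The portability requirement in the paragraph above amounts to a capability check against four features. The sketch encodes them as hypothetical capability strings (they are not OpenXR extension names or SDK identifiers) and reports what a given headset lacks.

```python
from typing import FrozenSet, Set

# Hypothetical labels for the four prerequisites named in the text.
REQUIRED_CAPABILITIES: FrozenSet[str] = frozenset(
    {"6dof_tracking", "passthrough", "spatial_anchors", "controller_tracking"}
)


def missing_capabilities(device_caps: Set[str]) -> Set[str]:
    """Required capabilities the headset lacks; an empty set means portable."""
    return set(REQUIRED_CAPABILITIES - device_caps)
```

Extra capabilities (such as eye tracking) are ignored, so a headset qualifies as long as it covers the four listed features.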

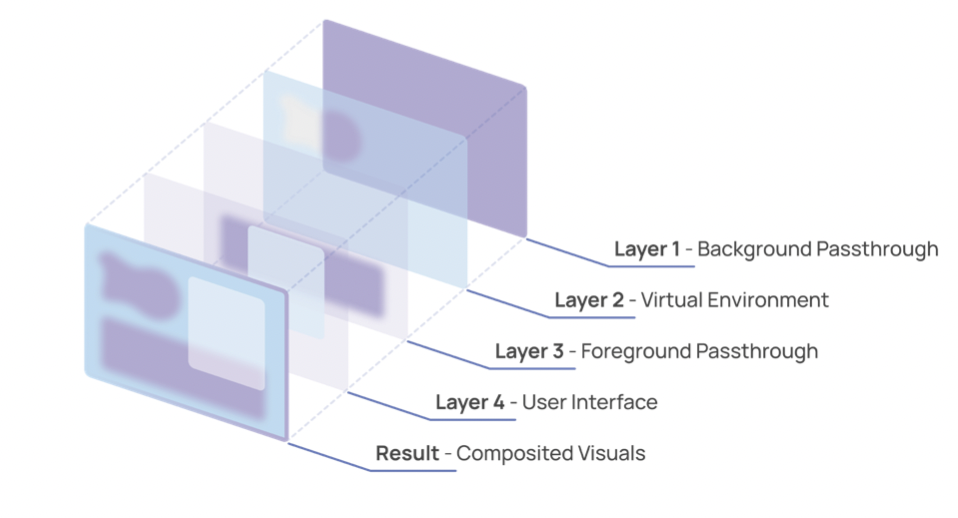

The MR workspace is a customizable 3D environment that blends physical and virtual worlds. The intermixing of the two worlds is achieved by compositing different layers together. The position and opacity of the content in each layer contribute to the overall blending of the scene. These layers can be conceptually categorized into four types based on their rendering priority in the depth buffer.
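The layer-based blending can be sketched per pixel with standard back-to-front alpha compositing (the "over" operator). This is a simplification under stated assumptions: layers are ordered by a single integer priority rather than a real depth-buffer test, one layer stands in for the passthrough feed, and the `Layer` fields and `composite` function are hypothetical, not the thesis's four layer types. Besides the final color, the function reports how visible each layer remains, which is one way to quantify how much reality shows through.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

RGB = Tuple[float, float, float]


@dataclass
class Layer:
    name: str      # hypothetical label, not the thesis's layer taxonomy
    priority: int  # higher priority is drawn later, so it appears in front
    color: RGB     # this layer's color at the pixel, channels in [0, 1]
    alpha: float   # this layer's opacity at the pixel, in [0, 1]


def composite(layers: List[Layer]) -> Tuple[RGB, Dict[str, float]]:
    """Blend layers back-to-front with the 'over' operator.

    Returns the final pixel color and each layer's visible weight: its own
    alpha multiplied by the transmittance of every layer in front of it.
    """
    ordered = sorted(layers, key=lambda layer: layer.priority)
    out: RGB = (0.0, 0.0, 0.0)
    for layer in ordered:
        a = layer.alpha
        out = tuple(c * a + o * (1.0 - a) for c, o in zip(layer.color, out))
    weights: Dict[str, float] = {}
    transmittance = 1.0
    for layer in reversed(ordered):  # front-most layer first
        weights[layer.name] = layer.alpha * transmittance
        transmittance *= 1.0 - layer.alpha
    return out, weights
```

For example, a fully opaque passthrough layer under a virtual panel at 25% opacity yields a pixel that is 75% reality and 25% virtual content; raising the panel's opacity or moving it behind the passthrough layer shifts that balance.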
